Unreal Engine MetaHuman Facial Motion with Faceware Mark IV and Glassbox Live Client

This was my first attempt at capturing 1:1 facial motion with MetaHumans in Unreal Engine. What I loved about this experience was that I could do everything entirely in Unreal.

Throughout this process, I developed a method for fine-tuning facial motion using the face control rig board. I will be sharing it in an upcoming tutorial.

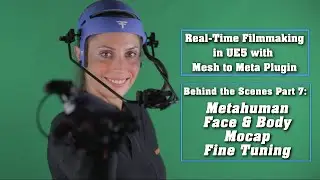

Equipment: I captured face and body motion simultaneously with Faceware's Mark IV HMC (head-mounted camera), the Xsens Link suit, and Manus Prime II gloves.

I streamed the facial motion from Faceware Studio directly into Unreal via the Glassbox Technologies Live Client plugin.

All of this was powered by a custom Puget Systems workstation with an NVIDIA RTX A6000.

Thank you to Epic Games and 3Lateral for making these high-fidelity MetaHumans and the incredible tools in Unreal Engine available to everyone.

Thank you to everyone at Faceware (Catarina M Rodrigues, Joshua Beaudry, Karen Chan (she/her) and Pete Busch), at Xsens (Katie Jo Turk), at Manus (Arsène van de Bilt), at Glassbox Technologies (Norman Wang and Johannes Wilke) and at Puget Systems.

Thank you to my Unreal Fellowship collaborators, Gabriel Paiva Harwat and Diana Diriwaechter, for your troubleshooting assistance.

Thank you to Bernhard Rieder for your lighting assistance, and to Daniel Rodriguez Cadena for customizing the female character's body textures. And thank you to Pixel Urge for modifying the character body so the MetaHuman face could be attached, and for teaching me how to modify the MetaHuman face textures in Substance Painter. You rock!

Female Robot character by Michael Weisheim Beresin

Music by Epidemic Sound

#unrealengine #metahumans #facemocap #motioncapture #virtualproduction #nvidiartx #pugetsystems #epicgames

#mocap #xsens #faceware #metaverse