Unreal Engine MetaHuman Facial Motion Test with the Faceware Mark IV HMC

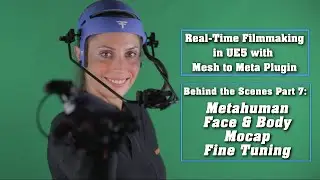

This is a short clip from my upcoming short film. I recently received Faceware's Mark IV HMC, and this is one of the first tests I did with it, streaming facial data directly into Unreal. I am so grateful to be able to use this incredible tool to drive facial motion on the MetaHumans.

A quick update:

I have been working on a short film made entirely in Unreal Engine. I'm using motion capture tech from Xsens, MANUS™ and Faceware with the Glassbox Technologies Live Client plugin (and the new Faceware Live Link plugin for Unreal Engine 4.27), and I'm capturing most of my cinematics with GlassboxTech's Dragonfly virtual camera tool.

All of this is powered by a custom Puget Systems workstation with NVIDIA Design and Visualization's RTX A6000.

I want to share everything I am learning with the virtual production community, so I will be breaking down clips from my film and sharing my process, best practices and in-depth tutorials.

Thank you to everyone at Xsens (Katie Jo Turk), Manus (Arsène van de Bilt), Faceware (Catarina M Rodrigues, Karen Chan (she/her) & Pete Busch) and GlassboxTech (Norman Wang, Johannes Wilke & Mariana Acuña Acosta) for teaching me how to use these tools so that I can share this knowledge with others.

Credits for character body modifications: Daniel Rodriguez Cadena (character textures) and Pixel Urge (face removal). Original character by Michael Weisheim.

Link to my Discord: / discord

#unrealengine #virtualproduction #motioncapture #nvidiartx #pugetsystems #epicgames #metahumans #mocap

#xsens #manumeta #faceware #glassboxtech