How To Web Scrape Data And Store It In A CSV File

Created and recorded by Vivek Jariwala in June 2022.

Music: Twilight Area by Alfred, https://lmms.io/lsp/?action=show&file...

Sample URL used in the video:

https://www.canadapost-postescanada.c... archived at https://web.archive.org/web/202206110...

Do you want to create a side project that uses content from other websites? Or do you want to collect information and check when it updates, such as tracking whether the price of a product you want fluctuates over time? Maybe you just want to store information from a website and manipulate it in a CSV file? All of these involve web scraping. Web scraping is a powerful and versatile application of programming that lets you collect, store, and manipulate information presented on a website. Today I am going to explain how to make a web scraper in Python using the Beautiful Soup and Requests libraries, and then format the collected data into a CSV file. I will demonstrate this with the postage rates listed on the Canada Post website. This is a simple use case where you may want to quickly check the current postage rates, especially if they change during the year.

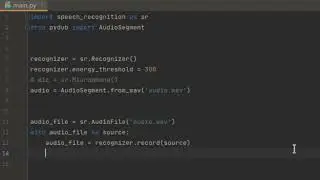

To begin the program, let's first import the libraries we are going to use: the Requests library, the Beautiful Soup library (specifically Beautiful Soup 4, imported as "bs4"), and Python's built-in "csv" module. The Requests library lets us send HTTP requests, such as downloading a web page. The Beautiful Soup library parses HTML so that we can search it and extract data from it.
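A minimal sketch of the top of such a script, assuming the third-party packages are installed (pip install requests beautifulsoup4). The URL is a hypothetical placeholder because the Canada Post link in this post is truncated, and "fetch_html" is a helper name made up for this example; the 10-second timeout is also my own choice, not something from the video.

```python
import csv  # standard library: used later to write the CSV file
import requests  # third-party: pip install requests
from bs4 import BeautifulSoup  # third-party: pip install beautifulsoup4

# Hypothetical placeholder: substitute the real postage-rates page here.
URL = "https://example.com/postage-rates"


def fetch_html(url: str) -> str:
    """Send an HTTP GET request and return the response body as text."""
    response = requests.get(url, timeout=10)  # give up if the server does not answer in 10 s
    response.raise_for_status()  # raise requests.HTTPError on 4xx/5xx status codes
    return response.text
```

Calling "fetch_html(URL)" returns the raw HTML string that the next step hands to Beautiful Soup. Checking the status code first avoids silently parsing an error page.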

Let's create a Beautiful Soup object from the page's HTML, which we download with Requests. This object lets us search and navigate the HTML of the website.
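As a sketch of this step, assuming the built-in "html.parser" backend (the video may have used a different parser), the helper below turns an HTML string into a searchable tree. "make_soup" is a hypothetical name, and the one-line HTML snippet is made up so the example runs without a network connection.

```python
from bs4 import BeautifulSoup


def make_soup(html: str) -> BeautifulSoup:
    """Parse an HTML string into a navigable BeautifulSoup tree."""
    # "html.parser" ships with Python, so no extra parser package is required
    return BeautifulSoup(html, "html.parser")


# In the real scraper, html would be requests.get(url).text; a made-up snippet stands in here.
soup = make_soup('<div class="cpc-postage-table"><p>Up to 30 g</p></div>')
print(soup.find("div", class_="cpc-postage-table").p.text)  # Up to 30 g
```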

Now let's create some arrays (in Python, lists) to store the information we want to collect. Based on this table, we want to collect the weights and the prices.

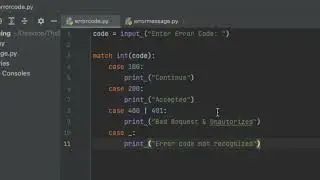

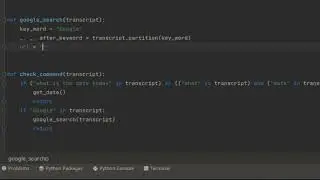

Now we create the for loop that cycles through each line of this table and stores the relevant information in the lists. The HTML class we want to filter for is "cpc-postage-table", which is also the class we see the table under when we inspect it with the browser's Inspect Element tool. The "find_all" method returns every occurrence of the "cpc-postage-table" class in the HTML, not just the first one.

Next, we want to store the text displayed on the website in a string variable, so we created a variable called "string" and stored the text in it using the ".text" attribute. We can also narrow the search to the "p" (paragraph) tag, which is used within the "cpc-postage-table" class to display the weight amounts. For example, calling ".find("p")" before ".text" extracts only that paragraph's text, and not the other text in the class that sits in its own tags. We then add this string to the weights list with the ".append" method, passing the string variable as the argument in the parentheses. The ".strip()" method removes the whitespace before and after the text.
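A minimal sketch of the weight loop described above. The HTML is made up for illustration (including the placeholder prices), and it assumes each element with the "cpc-postage-table" class holds one weight in a "p" tag; the real Canada Post markup may be structured differently, so adjust the selectors to what Inspect Element shows you.

```python
from bs4 import BeautifulSoup

# Made-up HTML for illustration only; the real page's structure may differ.
html = """
<div class="cpc-postage-table"><p> Up to 30 g </p><span>Stamp: $1.00</span></div>
<div class="cpc-postage-table"><p> 30 g to 50 g </p><span>Stamp: $2.00</span></div>
"""
soup = BeautifulSoup(html, "html.parser")

weights = []  # Python list used as the "array" of weights
for row in soup.find_all(class_="cpc-postage-table"):  # every element with this class
    p_tag = row.find("p")  # first <p> inside this element, or None if there is none
    if p_tag is None:
        continue  # skip elements without a weight paragraph
    string = p_tag.text  # only the paragraph's text, not the <span> text
    weights.append(string.strip())  # drop leading and trailing whitespace

print(weights)  # ['Up to 30 g', '30 g to 50 g']
```

Note that "class_" has a trailing underscore because "class" is a reserved word in Python.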

We use the same logic in the next for loop to extract the prices. However, I want to point out some key differences that I had to implement, since you will likely also have to make modifications based on the HTML of the website you are scraping. Unfortunately, the prices are not wrapped in their own distinct tag that would differentiate them from the weight amounts. To work around this, I did not filter by the "p" paragraph tag this time. Instead, I extracted all the text within the class, which includes the price amounts. Since I only want the prices, I created a new variable called "substring" and used the ".rpartition" method to cut out the part of the text I wanted. The price amounts have a dollar sign in front of them, so I passed the dollar sign as the argument. ".rpartition" splits the string at the last dollar sign and returns three parts: the text before it, the dollar sign itself, and the text after it. The number 2 in the square brackets selects the third part (index 2), which is the characters after the dollar sign, in other words the price. Once again, I appended this value to the list, but this time I appended the "substring" variable.
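Here is a sketch of the price loop using ".rpartition", reusing the same made-up HTML (placeholder prices, not real Canada Post rates). It assumes the price is the last dollar amount in each element's text.

```python
from bs4 import BeautifulSoup

# Same made-up HTML as the weight example; the prices have no tag of their own to filter on.
html = """
<div class="cpc-postage-table"><p> Up to 30 g </p><span>Stamp: $1.00</span></div>
<div class="cpc-postage-table"><p> 30 g to 50 g </p><span>Stamp: $2.00</span></div>
"""
soup = BeautifulSoup(html, "html.parser")

prices = []
for row in soup.find_all(class_="cpc-postage-table"):
    text = row.text  # all text in the element, e.g. " Up to 30 g Stamp: $1.00"
    # rpartition("$") returns (text before the last "$", "$", text after it); [2] is the part after it
    substring = text.rpartition("$")[2]
    prices.append(substring.strip())

print(prices)  # ['1.00', '2.00']
```

One caveat: if a row contains no dollar sign, ".rpartition" returns two empty strings followed by the whole string, so index 2 would be the entire text. If some rows might lack a price, checking for "$" in the text before appending avoids storing the wrong value.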

I created a string variable to store the name of my CSV file. I then used the csv module to create the file and write to it: "csv.writer" wraps the open file, and its ".writerow" method writes one row at a time. I used ".writerow" to create the header of the table at the top of the file, with the columns index number, weight, and price. I then used a for loop to cycle through the lists I created and write the values at each index into a row of the CSV file, which Excel can open. And that's it.
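A sketch of the CSV-writing step, assuming the two lists were already filled by the loops above; the sample values and the file name "postage_rates.csv" are hypothetical. Opening the file with newline="" follows the csv module's documented recommendation so the writer controls line endings.

```python
import csv

# Hypothetical sample values standing in for the scraped lists.
weights = ["Up to 30 g", "30 g to 50 g"]
prices = ["1.00", "2.00"]

filename = "postage_rates.csv"  # hypothetical file name

with open(filename, "w", newline="", encoding="utf-8") as csv_file:
    writer = csv.writer(csv_file)
    writer.writerow(["Index", "Weight", "Price"])  # header row
    # enumerate supplies the index number (starting at 0); zip pairs each weight with its price
    for index, (weight, price) in enumerate(zip(weights, prices)):
        writer.writerow([index, weight, price])
```

"zip" stops at the shorter list, so if the two loops could pick up different numbers of rows, comparing "len(weights)" and "len(prices)" first would catch a mismatch instead of silently dropping rows.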

Let's run the program and then open the CSV file in Excel to check that it worked correctly, and it did!
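If you would rather check the output from Python instead of opening Excel, a small sketch like the one below reads the file back with "csv.DictReader", which uses the header row as dictionary keys. "read_rates_csv" is a hypothetical helper name, and it assumes the header is "Index,Weight,Price" as written in the previous step.

```python
import csv


def read_rates_csv(filename: str) -> list[dict[str, str]]:
    """Read the CSV back; each row becomes a dict keyed by the header names."""
    with open(filename, newline="", encoding="utf-8") as csv_file:
        return list(csv.DictReader(csv_file))
```

For example, looping over "read_rates_csv("postage_rates.csv")" and printing "row["Weight"]" and "row["Price"]" shows each scraped rate. Every value comes back as a string, so convert prices with "float()" if you want to compare them over time.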