The Successor Representation: Its Computational Logic and Neural Substrates

This is a talk I gave on September 13, 2019, at the neural AI reading group at Mila.

Paper details:

The Successor Representation: Its Computational Logic and Neural Substrates

Samuel J. Gershman

Journal of Neuroscience 15 August 2018, 38 (33) 7193-7200; DOI: https://doi.org/10.1523/JNEUROSCI.015...

Paper link: https://www.jneurosci.org/content/38/...

Abstract:

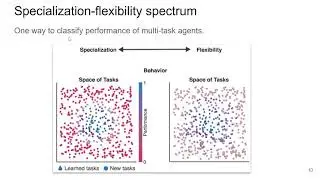

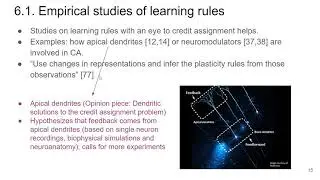

Reinforcement learning is the process by which an agent learns to predict long-term future reward. We now understand a great deal about the brain's reinforcement learning algorithms, but we know considerably less about the representations of states and actions over which these algorithms operate. A useful starting point is asking what kinds of representations we would want the brain to have, given the constraints on its computational architecture. Following this logic leads to the idea of the successor representation, which encodes states of the environment in terms of their predictive relationships with other states. Recent behavioral and neural studies have provided evidence for the successor representation, and computational studies have explored ways to extend the original idea. This paper reviews progress on these fronts, organizing them within a broader framework for understanding how the brain negotiates tradeoffs between efficiency and flexibility for reinforcement learning.
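To make "predictive relationships with other states" concrete, here is a minimal sketch of the standard definition of the successor representation: M[s][s'] is the expected discounted number of future visits to s' when starting from s, so that M = (I - γT)^(-1) for a fixed policy with transition matrix T, and values are V = M r. The 3-state chain, the discount of 0.5, the reward vectors, and the function names are all made up for illustration; none of this is code from the paper or the talk.

```python
# Sketch only: successor representation (SR) for a hypothetical 3-state chain.
# M[s][s2] = expected discounted number of future visits to s2, starting from s.

def successor_representation(T, gamma, n_iters=500):
    """Iterate M <- I + gamma * T M; for gamma < 1 this converges to (I - gamma*T)^-1."""
    n = len(T)
    identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    M = [row[:] for row in identity]
    for _ in range(n_iters):
        M = [
            [identity[i][j] + gamma * sum(T[i][k] * M[k][j] for k in range(n))
             for j in range(n)]
            for i in range(n)
        ]
    return M


def values(M, rewards):
    """State values as a dot product of each SR row with the reward vector."""
    return [sum(m * r for m, r in zip(row, rewards)) for row in M]


# Assumed toy environment: 0 -> 1 -> 2, where state 2 loops back to itself.
T = [[0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0],
     [0.0, 0.0, 1.0]]
gamma = 0.5

M = successor_representation(T, gamma)

# Reward only in state 2.
V_original = values(M, [0.0, 0.0, 1.0])

# Move the reward to state 1: the same M is reused, only the dot product changes.
V_revalued = values(M, [0.0, 1.0, 0.0])

print(M)
print(V_original, V_revalued)
```

The second call to `values` is the point of the example: when the reward changes, values can be recomputed from the cached M without relearning the predictive map. This is one way to read the efficiency versus flexibility tradeoff the abstract mentions.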

==

This talk wasn't as good as my other talks. Here are some things I learned:

Do more dry runs before the talk (I did just one).

Explain as you would to a 5-year-old. Anticipate the questions that might come up and have answers ready.

Show everything visually. When you want to skip through something, provide enough detail on the slides for interested people to refer to later.

Reimplement every small experiment that you show in your talk.
