A Smart Guidance System for the Visually Impaired

Project: A Smart Guidance System for the Visually Impaired

By: Nameera Bhugalee

BSc Computer Science (2020) University of Mauritius

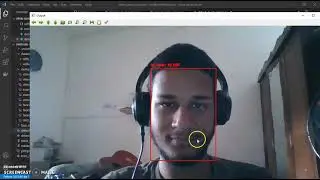

A system was developed that performs real-time object detection using a quantized MobileNet SSD model trained on the COCO dataset. The model detects and classifies 80 different classes of objects. TensorFlow Lite APIs, such as the object detection API, proved suitable for this project. For each detected object, the model outputs a label identifying its class, a confidence score, and the data specifying where the object lies in the image. On a mobile phone, each result is shown as a bounding box drawn around the object, together with the object's label and confidence score. A confidence threshold determines whether a detection is accepted or discarded, which helps filter out false positives (wrongly identified objects). In addition, the Android Speech API was used for text-to-speech and speech-to-text; for speech recognition, this API streams audio to remote servers. Another feature of the system is face recognition, implemented with BlazeFace, a lightweight face detection framework that runs at 200-1000+ FPS on mobile devices. To assess accuracy and robustness, the system was tested in different rooms and in different scenarios. Several variables (e.g. luminosity, distance, number of objects present, and the position of the smartphone) were varied to properly evaluate its performance.
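The thresholding step described above can be illustrated with a minimal sketch. It is not the project's actual code: the function names, the `Detection` type, the 0.5 threshold, the short label list, and the assumed box layout (normalized `top, left, bottom, right`) are all hypothetical choices made for illustration, and plain Python lists stand in for the model's output arrays.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Detection:
    """One accepted detection (hypothetical container, not a library type)."""
    label: str
    score: float
    box: Tuple[float, float, float, float]  # assumed: top, left, bottom, right in [0, 1]


def filter_detections(
    boxes: Sequence[Sequence[float]],
    class_ids: Sequence[int],
    scores: Sequence[float],
    labels: Sequence[str],
    threshold: float = 0.5,  # assumed value; the source does not state the threshold used
) -> List[Detection]:
    """Keep only detections whose confidence score reaches the threshold."""
    kept = []
    for box, class_id, score in zip(boxes, class_ids, scores):
        if score >= threshold:
            kept.append(Detection(labels[int(class_id)], float(score), tuple(box)))
    return kept


def to_pixels(box: Sequence[float], width: int, height: int) -> Tuple[int, int, int, int]:
    """Scale a normalized box to pixel coordinates (left, top, right, bottom) for drawing."""
    top, left, bottom, right = box
    return (
        int(round(left * width)),
        int(round(top * height)),
        int(round(right * width)),
        int(round(bottom * height)),
    )


if __name__ == "__main__":
    # Made-up example values, not results from the project.
    labels = ["person", "chair", "cup"]
    boxes = [(0.1, 0.2, 0.5, 0.6), (0.0, 0.0, 1.0, 1.0), (0.3, 0.3, 0.4, 0.4)]
    class_ids = [0, 1, 2]
    scores = [0.9, 0.3, 0.5]
    for det in filter_detections(boxes, class_ids, scores, labels):
        print(det.label, det.score, to_pixels(det.box, 640, 480))
```

Raising the threshold discards more false positives but also risks dropping correct low-confidence detections, so in practice the value would need to be tuned under the same conditions (lighting, distance, clutter) used to evaluate the system.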