What is the attention mechanism? | Python code to implement an attention mechanism using Keras

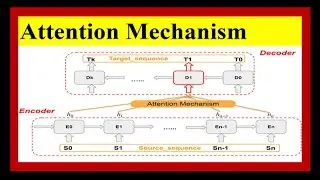

What is the attention mechanism?
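The attention mechanism lets a model build, for every output step, a weighted average of all input positions, where the weights come from how well each position matches the current query. A minimal NumPy sketch of scaled dot-product attention, with toy random vectors standing in for encoder and decoder states (the function names and sizes are illustrative assumptions, not the tutorial's code):

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)

def dot_product_attention(query, keys, values):
    """query: (T_q, d), keys: (T_k, d), values: (T_k, d_v)."""
    d = query.shape[-1]
    # Similarity score between every query position and every key position.
    scores = query @ keys.T / np.sqrt(d)      # (T_q, T_k)
    # Attention weights: each row is a probability distribution over the keys.
    weights = softmax(scores, axis=-1)        # (T_q, T_k)
    # Context vectors: weighted average of the values.
    context = weights @ values                # (T_q, d_v)
    return context, weights

rng = np.random.default_rng(0)
encoder_states = rng.normal(size=(5, 4))  # toy data: 5 source tokens, 4-dim states
decoder_state = rng.normal(size=(1, 4))   # one decoder step acting as the query
context, weights = dot_product_attention(decoder_state, encoder_states, encoder_states)
```

Each row of `weights` sums to 1, so `context` is a convex combination of the encoder states.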

The simplest Python code to implement an attention mechanism with the Keras framework

What is a soft attention layer?
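A soft attention layer scores every source position, turns the scores into a probability distribution with a softmax, and returns the weighted average of all positions; because every step is differentiable, it can be trained with ordinary backpropagation. Hard attention instead commits to a single position, which is not differentiable. The sketch below contrasts the two using an additive (Bahdanau-style) scorer; the weight matrices are random placeholders for parameters a real model would learn, and all sizes are assumptions.

```python
import numpy as np

rng = np.random.default_rng(42)
T, units = 6, 8
encoder_states = rng.normal(size=(T, units))  # toy encoder outputs h_1..h_T
decoder_state = rng.normal(size=(units,))     # toy decoder state s_t

# Random stand-ins for the learned parameters of an additive scorer.
W_enc = rng.normal(size=(units, units))
W_dec = rng.normal(size=(units, units))
v = rng.normal(size=(units,))

# e_i = v^T tanh(W_enc h_i + W_dec s_t): one score per source position.
scores = np.tanh(encoder_states @ W_enc + decoder_state @ W_dec) @ v  # (T,)

# Soft attention: softmax over all positions, then a weighted average.
soft_weights = np.exp(scores - scores.max())
soft_weights /= soft_weights.sum()
soft_context = soft_weights @ encoder_states                          # (units,)

# Hard attention (for contrast): pick a single position outright.
hard_index = int(np.argmax(scores))
hard_context = encoder_states[hard_index]
```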

What is a seq2seq model?
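A seq2seq (sequence-to-sequence) model maps an input sequence to an output sequence of possibly different length: an encoder reads the input into hidden states, and a decoder generates the output one token at a time from those states. In the plain version the decoder only receives the encoder's final state, which is the bottleneck attention is meant to relieve. A minimal NumPy sketch with a vanilla RNN and random, untrained weights (so the predicted ids are meaningless); the sizes and the `START`/`END` ids are hypothetical:

```python
import numpy as np

rng = np.random.default_rng(0)
src_vocab, tgt_vocab, emb, hidden = 10, 12, 8, 16
START, END = 1, 2  # hypothetical special-token ids in the target vocabulary

# Random, untrained weights; a real model would learn these.
E_src = rng.normal(scale=0.1, size=(src_vocab, emb))
E_tgt = rng.normal(scale=0.1, size=(tgt_vocab, emb))
W_enc_x, W_enc_h = rng.normal(scale=0.1, size=(emb, hidden)), rng.normal(scale=0.1, size=(hidden, hidden))
W_dec_x, W_dec_h = rng.normal(scale=0.1, size=(emb, hidden)), rng.normal(scale=0.1, size=(hidden, hidden))
W_out = rng.normal(scale=0.1, size=(hidden, tgt_vocab))

def encode(src_ids):
    """Vanilla RNN encoder: returns every hidden state and the final one."""
    h = np.zeros(hidden)
    states = []
    for tok in src_ids:
        h = np.tanh(E_src[tok] @ W_enc_x + h @ W_enc_h)
        states.append(h)
    return np.stack(states), h

def greedy_decode(h, max_len=5):
    """Decoder RNN seeded with the encoder's final state; takes the argmax token each step."""
    tok, out = START, []
    for _ in range(max_len):
        h = np.tanh(E_tgt[tok] @ W_dec_x + h @ W_dec_h)
        tok = int(np.argmax(h @ W_out))
        if tok == END:
            break
        out.append(tok)
    return out

all_states, final_state = encode([3, 5, 7, 4])
prediction = greedy_decode(final_state)
```

Only `final_state` reaches the decoder here; `all_states` is what an attention layer would additionally let the decoder look at.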

First, install Keras (it ships with TensorFlow as `tf.keras`, so `pip install tensorflow` is one way to get it).

Import the necessary libraries.

Define the training data.
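As a placeholder for the tutorial's own data (which is not reproduced here), a hypothetical handful of English-to-Spanish pairs; keeping each pair together in one tuple avoids the two lists drifting out of alignment:

```python
# Hypothetical toy English -> Spanish pairs; replace with the real training data.
pairs = [
    ("hello", "hola"),
    ("thank you", "gracias"),
    ("good morning", "buenos días"),
    ("good night", "buenas noches"),
    ("how are you", "cómo estás"),
]

# Split into parallel lists: inputs for the encoder, targets for the decoder.
english_phrases = [en for en, _ in pairs]
spanish_phrases = [es for _, es in pairs]
```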

Prepare the data for the model:

a) Convert the phrases to sequences of integers.

b) Pad the sequences so they all have the same length.
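Steps a) and b) can be sketched in plain Python. This roughly mirrors what Keras' `Tokenizer` (in TF 2.x, `tf.keras.preprocessing.text`) and `pad_sequences` utilities do, including keeping id 0 free for padding, but the helper names below are hypothetical and the tie-breaking order is an assumption. Keras' `pad_sequences` defaults to `padding="pre"`; this sketch defaults to `"post"` so the real tokens come first.

```python
from collections import Counter

def build_vocab(phrases):
    """Map each word to an integer id, most frequent first; id 0 stays free for padding."""
    counts = Counter(word for p in phrases for word in p.lower().split())
    # Sort by descending frequency, then alphabetically, so the ids are deterministic.
    ordered = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: i + 1 for i, word in enumerate(ordered)}

def to_sequences(phrases, vocab):
    """a) Replace every word with its integer id (unknown words are dropped)."""
    return [[vocab[w] for w in p.lower().split() if w in vocab] for p in phrases]

def pad(sequences, maxlen=None, padding="post", value=0):
    """b) Pad or truncate every sequence to maxlen.

    Over-long sequences keep their first maxlen tokens (Keras' default
    truncating="pre" would instead drop tokens from the start).
    """
    if maxlen is None:
        maxlen = max(len(s) for s in sequences)
    padded = []
    for s in sequences:
        s = list(s[:maxlen])
        filler = [value] * (maxlen - len(s))
        padded.append(s + filler if padding == "post" else filler + s)
    return padded

english_phrases = ["good morning", "good night", "thank you very much"]
eng_vocab = build_vocab(english_phrases)
eng_sequences = to_sequences(english_phrases, eng_vocab)
eng_padded = pad(eng_sequences)
```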

Create a sequence-to-sequence model with an attention mechanism.
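One way to build such a model with the Keras functional API, assuming TensorFlow 2.x is installed: an LSTM encoder that returns its full output sequence, an LSTM decoder initialised from the encoder's final states, and Keras' built-in `Attention` layer (dot-product, Luong-style attention) with the decoder outputs as queries and the encoder outputs as values. All sizes are hypothetical, and this is a sketch rather than the tutorial's exact architecture.

```python
import numpy as np
from tensorflow import keras

layers = keras.layers

# Hypothetical sizes for a toy English -> Spanish task.
src_vocab_size, tgt_vocab_size = 50, 60
src_len, tgt_len = 6, 7
embed_dim, units = 32, 64

# Encoder: embed source ids and keep every LSTM output so attention can look at all of them.
encoder_inputs = keras.Input(shape=(src_len,), dtype="int32", name="encoder_inputs")
enc_emb = layers.Embedding(src_vocab_size, embed_dim)(encoder_inputs)
encoder_outputs, state_h, state_c = layers.LSTM(
    units, return_sequences=True, return_state=True)(enc_emb)

# Decoder: reads the (teacher-forced) target ids, starting from the encoder's final states.
decoder_inputs = keras.Input(shape=(tgt_len,), dtype="int32", name="decoder_inputs")
dec_emb = layers.Embedding(tgt_vocab_size, embed_dim)(decoder_inputs)
decoder_outputs = layers.LSTM(units, return_sequences=True)(
    dec_emb, initial_state=[state_h, state_c])

# Dot-product attention: decoder outputs are the queries, encoder outputs the values (and keys).
context = layers.Attention()([decoder_outputs, encoder_outputs])

# Combine each decoder step with its context vector and predict a target word.
combined = layers.Concatenate()([decoder_outputs, context])
outputs = layers.Dense(tgt_vocab_size, activation="softmax")(combined)

model = keras.Model([encoder_inputs, decoder_inputs], outputs)

# Sanity check on dummy ids: one probability distribution per decoder step.
probs = model.predict(
    [np.zeros((2, src_len), dtype="int32"), np.zeros((2, tgt_len), dtype="int32")],
    verbose=0)
```

The decoder LSTM uses the same number of units as the encoder so the dot-product scores are defined; concatenating the context with the decoder output before the final `Dense` layer is one common way to combine them.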

Compile the model.
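Compiling typically looks something like `model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])`. The `categorical_crossentropy` loss expects one-hot targets, while `sparse_categorical_crossentropy` expects integer ids; both compute the same quantity. A NumPy sketch of that loss on toy probabilities (ignoring any numerical clipping Keras may apply):

```python
import numpy as np

# Toy predicted distributions for 2 time steps over a 4-word vocabulary.
probs = np.array([[0.7, 0.1, 0.1, 0.1],
                  [0.2, 0.5, 0.2, 0.1]])
target_ids = np.array([0, 2])      # integer targets
one_hot = np.eye(4)[target_ids]    # the same targets as one-hot rows

# Categorical cross-entropy: -sum(y_true * log(y_pred)) per step, averaged over steps.
cce = -np.mean(np.sum(one_hot * np.log(probs), axis=-1))

# Sparse variant: read off the predicted probability of the true class directly.
scce = -np.mean(np.log(probs[np.arange(len(target_ids)), target_ids]))
```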

Define the batch size and the number of epochs.
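The batch size sets how many samples feed each gradient update, and the number of epochs sets how many full passes are made over the training data. The arithmetic, with hypothetical values (Keras counts a final partial batch as a step):

```python
import math

num_samples = 1000  # hypothetical dataset size
batch_size = 64
epochs = 20

steps_per_epoch = math.ceil(num_samples / batch_size)             # last batch may be smaller
total_updates = steps_per_epoch * epochs                          # gradient steps over all epochs
last_batch = num_samples - (steps_per_epoch - 1) * batch_size     # size of the final partial batch
```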

Convert the target sequences to one-hot vectors.
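One-hot encoding turns each target id into a vector of length `vocab_size` with a single 1, which is the format `categorical_crossentropy` expects (Keras also provides `to_categorical` in `keras.utils` for this). A NumPy sketch on toy padded targets; note that the padding id 0 also becomes a one-hot vector unless it is masked:

```python
import numpy as np

# Toy padded target sequences (0 = padding).
padded_targets = np.array([[3, 1, 4, 0],
                           [2, 5, 0, 0]])
vocab_size = 6  # ids run from 0 (padding) to 5

# Indexing an identity matrix turns each id into a row with a single 1.
one_hot_targets = np.eye(vocab_size, dtype="float32")[padded_targets]  # (2, 4, 6)
```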

Train the model.
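Seq2seq models are commonly trained with teacher forcing: the decoder input is the target sequence shifted right by one step with a start token in front, while the labels are the unshifted targets. The sketch assumes hypothetical start, end, and padding ids. With a model that takes encoder and decoder inputs, training would then look roughly like `model.fit([encoder_ids, decoder_input], one_hot_targets, batch_size=batch_size, epochs=epochs)`, where those array names are placeholders.

```python
import numpy as np

START_ID = 1  # hypothetical id reserved for a <start> token

def teacher_forcing_inputs(target_ids, start_id=START_ID):
    """Shift each target sequence right by one step and put start_id in front.

    At step t the decoder sees the true token from step t-1; the labels
    remain the unshifted targets.
    """
    target_ids = np.asarray(target_ids)
    decoder_in = np.zeros_like(target_ids)
    decoder_in[:, 0] = start_id
    decoder_in[:, 1:] = target_ids[:, :-1]
    return decoder_in

targets = np.array([[7, 8, 9, 2],   # 2 = hypothetical <end> id
                    [5, 2, 0, 0]])  # 0 = padding
decoder_input = teacher_forcing_inputs(targets)
```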

Reverse the one-hot encoding to recover the translated phrases.
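Reversing the one-hot (or softmax) output means taking the arg-max at every time step and mapping the ids back to words, skipping padding and stopping at an end token. A sketch with a hypothetical Spanish vocabulary and a toy prediction:

```python
import numpy as np

# Hypothetical target vocabulary (0 is padding) and its inverse mapping.
word_index = {"hola": 1, "buenos": 2, "días": 3, "<end>": 4}
index_word = {i: w for w, i in word_index.items()}

def decode(prob_sequence, index_word, end_token="<end>"):
    """Turn a (time_steps, vocab) array of probabilities or one-hot rows back into text."""
    words = []
    for token_id in np.argmax(prob_sequence, axis=-1):
        word = index_word.get(int(token_id))  # None for the padding id 0
        if word is None:
            continue
        if word == end_token:
            break
        words.append(word)
    return " ".join(words)

# A toy model output: 5 time steps over a vocabulary of size 5.
prediction = np.eye(5)[[2, 3, 4, 0, 0]]
decoded = decode(prediction, index_word)
```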

Print the results.
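A small sketch for printing source phrases next to their predictions, plus an exact-match rate; the phrases here are hypothetical placeholders, not results from the tutorial:

```python
# Hypothetical inputs, references, and model outputs to display.
sources = ["good morning", "thank you"]
references = ["buenos días", "gracias"]
predictions = ["buenos días", "gracias gracias"]

def format_results(sources, references, predictions):
    """One line per example: source, prediction, expected translation, and a match flag."""
    lines = []
    for src, ref, pred in zip(sources, references, predictions):
        mark = "OK" if pred == ref else "MISMATCH"
        lines.append(f"{src!r} -> {pred!r} (expected {ref!r}) [{mark}]")
    return lines

report = format_results(sources, references, predictions)
for line in report:
    print(line)

exact_match = sum(p == r for p, r in zip(predictions, references)) / len(references)
print(f"exact-match accuracy: {exact_match:.2f}")
```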

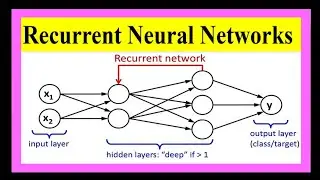

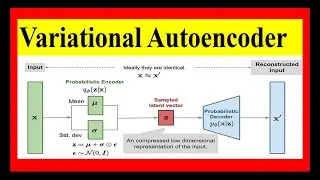

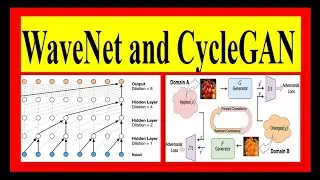
