Pretraining vs fine-tuning LLMs using BERT, with Python code

What is LLM pretraining?

What is LLM fine-tuning?

Explaining pretraining with a BERT example

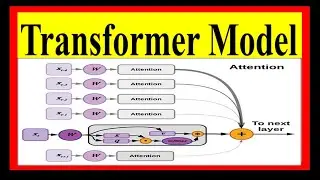
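BERT is pretrained with a masked language modeling (MLM) objective. About 15% of the input tokens are chosen as prediction targets. Of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. The model must then recover the original token at every chosen position. The sketch below applies that rule to a plain list of words. It is a minimal illustration, not BERT's actual data pipeline. Whitespace-split words stand in for WordPiece tokens, and each token is selected independently with probability 0.15 (implementations differ in exactly how they pick the 15%). The `mask_tokens` helper is hypothetical.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Apply BERT-style MLM masking to a list of tokens (illustrative sketch).

    Returns (inputs, labels):
      inputs - the corrupted sequence fed to the model
      labels - the original token at selected positions, None elsewhere
               (None marks positions that do not contribute to the loss)
    """
    rng = random.Random(seed)
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected as a prediction target
        labels[i] = tok  # the model must predict the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK  # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels

inputs, labels = mask_tokens("the cat sat on the mat".split(), vocab=["dog", "ran", "hat"], seed=1)
print(inputs)
print(labels)
```

Keeping some selected tokens unchanged or randomly replaced means the model cannot assume that only `[MASK]` positions matter. This matters because `[MASK]` never appears during fine-tuning.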

What is Bidirectional Encoder Representations from Transformers (BERT)?
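BERT is an encoder-only Transformer. "Bidirectional" means each token's representation is computed from both its left and its right context, which is what makes the masked-token objective possible. Inputs follow a fixed layout. A `[CLS]` token comes first, a `[SEP]` token follows each segment, and segment (token type) ids are 0 for the first segment and 1 for the second. For classification tasks, the final hidden state of `[CLS]` serves as the summary of the whole input. The sketch below builds that layout. Whitespace splitting stands in for BERT's WordPiece tokenizer, and `build_bert_input` is a hypothetical helper, not a library function.

```python
def build_bert_input(sentence_a, sentence_b=None):
    """Lay out one or two text segments in BERT's input format (sketch).

    Returns (tokens, segment_ids). Whitespace splitting is a stand-in
    for the real WordPiece tokenizer.
    """
    tokens = ["[CLS]"] + sentence_a.split() + ["[SEP]"]
    segment_ids = [0] * len(tokens)  # [CLS], segment A and its [SEP] get id 0
    if sentence_b is not None:
        tokens_b = sentence_b.split() + ["[SEP]"]
        tokens += tokens_b
        segment_ids += [1] * len(tokens_b)  # segment B and its [SEP] get id 1
    return tokens, segment_ids

tokens, segments = build_bert_input("the cat sat", "it was tired")
print(tokens)
# ['[CLS]', 'the', 'cat', 'sat', '[SEP]', 'it', 'was', 'tired', '[SEP]']
print(segments)
# [0, 0, 0, 0, 0, 1, 1, 1, 1]
```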

Python code for pretraining an LLM
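Full pretraining needs a Transformer encoder, a tokenizer, and a large unlabeled corpus, which is beyond a short snippet. What defines the pretraining step is its objective. For MLM, cross-entropy is computed only at the masked positions. The sketch below shows that loss in plain Python. It assumes hard-coded scores (`logits`) in place of real model outputs, plus the `-100` "ignore" label convention used by PyTorch's `CrossEntropyLoss` and Hugging Face Transformers. The original BERT also adds a next sentence prediction loss, which is omitted here.

```python
import math

IGNORE_INDEX = -100  # label for positions that do not contribute to the loss

def mlm_loss(logits, labels):
    """Mean cross-entropy over positions whose label is not IGNORE_INDEX.

    logits: one list of vocabulary scores per position (model output).
    labels: original token id at masked positions, IGNORE_INDEX elsewhere.
    """
    total, count = 0.0, 0
    for scores, label in zip(logits, labels):
        if label == IGNORE_INDEX:
            continue  # unmasked positions are skipped
        # numerically stable log-sum-exp: subtract the max score first
        m = max(scores)
        log_z = m + math.log(sum(math.exp(s - m) for s in scores))
        total += log_z - scores[label]  # -log softmax(scores)[label]
        count += 1
    return total / count if count else 0.0

# Two positions over a 3-token vocabulary; only the second one was masked.
logits = [[2.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
labels = [IGNORE_INDEX, 1]
print(mlm_loss(logits, labels))  # log(3) ≈ 1.0986: uniform scores over 3 tokens
```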

What is LLM fine-tuning?

Python code for fine-tuning an LLM

Difference between LLM pretraining and fine-tuning
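The two stages differ in their data, objective, starting weights, and scale. The values below for BERT come from the original BERT paper. Pretraining used Adam at a learning rate of 1e-4 for 1,000,000 steps with batches of 256 sequences, on BooksCorpus and English Wikipedia. For fine-tuning, the paper recommends learning rates of 5e-5, 3e-5, or 2e-5 for 2 to 4 epochs. The `Stage` dataclass is only a hypothetical way to lay these values side by side.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stage:
    """One training stage, summarised by a few key properties."""
    data: str
    objective: str
    start_from: str
    learning_rates: tuple  # Adam learning rates reported in the BERT paper
    length: str

PRETRAINING = Stage(
    data="large unlabeled corpus (BooksCorpus + English Wikipedia)",
    objective="self-supervised: masked language modeling + next sentence prediction",
    start_from="randomly initialized weights",
    learning_rates=(1e-4,),
    length="1,000,000 steps at 256 sequences per batch",
)

FINE_TUNING = Stage(
    data="labeled examples for one downstream task",
    objective="supervised: task loss, e.g. cross-entropy over class labels",
    start_from="the pretrained BERT weights",
    learning_rates=(5e-5, 3e-5, 2e-5),
    length="2 to 4 epochs over the task data",
)

for attr in ("data", "objective", "start_from", "learning_rates", "length"):
    print(f"{attr}:")
    print(f"  pretraining: {getattr(PRETRAINING, attr)}")
    print(f"  fine-tuning: {getattr(FINE_TUNING, attr)}")
```

The key point is that fine-tuning starts from the pretrained weights and adjusts them gently, so it needs far less data, compute, and learning rate than pretraining.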

#roberta

#pretraining

#largelanguagemodels

#finetuning

#nlp

#bidirectional

#transformers

#pythonprogramming

#python

#pythoncoding

#pythontutorial

#pythonprojects

#pythonforbeginners

#sequence

#training