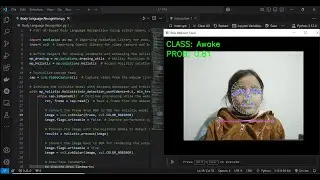

P10: AI-Based Body Language Recognition Using scikit-learn, OpenCV, and MediaPipe

#MediaPipe #Python #ArtificialIntelligence #Tech

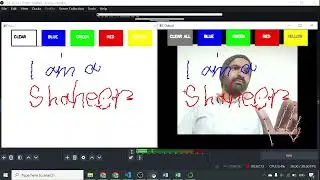

This project explores AI-powered body language recognition: a system that identifies gestures and interprets body movements from video. It combines OpenCV, MediaPipe, and scikit-learn to show how machine learning can decode non-verbal communication, from raw camera frames all the way to a trained classifier.

🔧 Tools and Libraries Used

• Python 3: The foundational programming language for this project, chosen for its simplicity, flexibility, and vast ecosystem of libraries tailored to machine learning, computer vision, and data analysis.

• OpenCV: A widely used open-source computer vision library. It handles the real-time video side of the system: reading frames from a camera or image, processing them, and displaying the results, while MediaPipe supplies the landmark detection.

• MediaPipe: An open-source framework from Google that ships pre-trained machine learning models for hand tracking, pose detection, and gesture recognition. It locates hand and body landmarks quickly and accurately, and those landmarks are the raw material for analyzing body language.

• scikit-learn: A versatile Python machine learning library, used here to train and evaluate the custom model that classifies body language gestures from the extracted landmarks.

📋 Project Workflow

1. Install and Import Dependencies: Set up a Python 3 environment with OpenCV, MediaPipe, and scikit-learn installed, then import them.

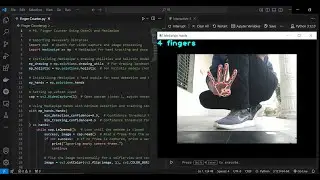

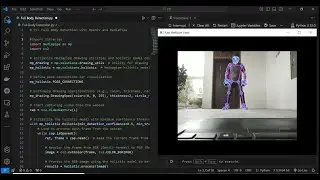
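Step 1 can be sketched as a quick dependency check. This is a minimal sketch, not the project's own setup script: it assumes the usual PyPI package names (`opencv-python`, `mediapipe`, `scikit-learn`) and simply reports which ones are still missing before importing them.

```python
# Install first (shell): pip install opencv-python mediapipe scikit-learn
import importlib.util

# Import name -> pip package name (assumed standard PyPI names).
REQUIRED = {"cv2": "opencv-python", "mediapipe": "mediapipe", "sklearn": "scikit-learn"}


def missing_packages(required=REQUIRED):
    """Return the pip names of packages whose import module cannot be found."""
    return [pip_name for module, pip_name in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages, install with: pip install " + " ".join(missing))
    else:
        import cv2
        import mediapipe as mp
        import sklearn
        print("OpenCV", cv2.__version__, "| MediaPipe", mp.__version__,
              "| scikit-learn", sklearn.__version__)
```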

2. Make Some Detections: Use OpenCV and MediaPipe to perform initial hand and pose detections on live video or image input, laying the groundwork for further processing.
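A detection loop for step 2 might look like the sketch below. It assumes MediaPipe's legacy Solutions API (`mp.solutions.holistic`, which tracks pose and both hands together); that is an assumption about the installed version, since newer MediaPipe releases favor the Tasks API. `detected_parts` is a hypothetical helper added for debugging, and the libraries are imported inside `run_detection` so the module loads even where OpenCV is absent.

```python
def detected_parts(results):
    """List which landmark groups MediaPipe Holistic found in a frame."""
    names = ("pose_landmarks", "face_landmarks",
             "left_hand_landmarks", "right_hand_landmarks")
    return [name for name in names if getattr(results, name, None) is not None]


def run_detection(camera_index=0):
    """Open the webcam, run Holistic on each frame, draw landmarks; press 'q' to quit."""
    import cv2                    # imported lazily: only needed for the live loop
    import mediapipe as mp

    mp_holistic = mp.solutions.holistic
    mp_drawing = mp.solutions.drawing_utils

    cap = cv2.VideoCapture(camera_index)
    with mp_holistic.Holistic(min_detection_confidence=0.5,
                              min_tracking_confidence=0.5) as holistic:
        while cap.isOpened():
            ok, frame = cap.read()
            if not ok:
                break
            # MediaPipe expects RGB, OpenCV delivers BGR.
            rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
            rgb.flags.writeable = False   # lets MediaPipe skip a defensive copy
            results = holistic.process(rgb)

            # Draw pose and hand landmarks on the original BGR frame
            # (draw_landmarks does nothing when a group was not detected).
            mp_drawing.draw_landmarks(frame, results.pose_landmarks,
                                      mp_holistic.POSE_CONNECTIONS)
            mp_drawing.draw_landmarks(frame, results.left_hand_landmarks,
                                      mp_holistic.HAND_CONNECTIONS)
            mp_drawing.draw_landmarks(frame, results.right_hand_landmarks,
                                      mp_holistic.HAND_CONNECTIONS)

            status = ", ".join(detected_parts(results)) or "nothing detected"
            cv2.putText(frame, status, (10, 30), cv2.FONT_HERSHEY_SIMPLEX,
                        0.6, (0, 255, 0), 2)
            cv2.imshow("Detections", frame)
            if cv2.waitKey(10) & 0xFF == ord("q"):
                break
    cap.release()
    cv2.destroyAllWindows()
```

Calling `run_detection()` opens the default camera; seeing the skeleton drawn on screen confirms the pipeline works before any data is collected.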

3. Capture Landmarks & Export to CSV: Extract key hand and pose landmarks, such as joint positions, and save them into a structured format (CSV) for training the machine learning model.
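One way to implement step 3 is to flatten each frame's landmarks into a fixed-width row and append it to a CSV with a class label. This sketch assumes Holistic-style results (33 pose landmarks, 21 per hand, each with `x`, `y`, `z`, `visibility`) and zero-fills any group that was not detected so every row has the same width. The column naming scheme and the file and class names in the usage note are hypothetical.

```python
import csv
import os

POSE_POINTS = 33   # MediaPipe pose landmarks
HAND_POINTS = 21   # MediaPipe landmarks per hand


def landmarks_to_row(landmark_list, n_points):
    """Flatten a MediaPipe landmark list into [x, y, z, visibility] per point.

    A missing detection (None) becomes zeros so every CSV row has the same width."""
    if landmark_list is None:
        return [0.0] * (4 * n_points)
    row = []
    for lm in landmark_list.landmark:
        row.extend([lm.x, lm.y, lm.z, lm.visibility])
    return row


def frame_features(results):
    """Concatenate pose + left hand + right hand landmarks from one Holistic result."""
    return (landmarks_to_row(results.pose_landmarks, POSE_POINTS)
            + landmarks_to_row(results.left_hand_landmarks, HAND_POINTS)
            + landmarks_to_row(results.right_hand_landmarks, HAND_POINTS))


def csv_header(n_points):
    """Header row: 'class' followed by x1, y1, z1, v1, ..., per landmark."""
    cols = ["class"]
    for i in range(1, n_points + 1):
        cols += [f"x{i}", f"y{i}", f"z{i}", f"v{i}"]
    return cols


def append_sample(csv_path, class_name, features):
    """Append one labelled row, writing the header first if the file is new."""
    is_new = not os.path.exists(csv_path)
    with open(csv_path, "a", newline="") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(csv_header(len(features) // 4))
        writer.writerow([class_name] + list(features))
```

Inside a detection loop like the one described in step 2, calling `append_sample("coords.csv", "Happy", frame_features(results))` on every frame while acting out one gesture, then repeating with another label, builds the training set one class at a time.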

4. Train Custom Model Using scikit-learn: Build a classification model from the collected landmark data, using scikit-learn to create, train, and fine-tune it so the system can interpret gestures accurately.
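A minimal sketch of step 4, assuming the CSV layout of label first and features after. The choice of candidates (a scaled logistic regression and a random forest), the 70/30 split, and the pickle file name are assumptions for illustration, not necessarily what the project uses; the "fine-tuning" here is just picking whichever candidate scores best on held-out data.

```python
import csv
import pickle

import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def load_dataset(csv_path):
    """Read the landmark CSV: first column is the label, the rest are features."""
    with open(csv_path, newline="") as f:
        reader = csv.reader(f)
        next(reader)                                   # skip the header row
        rows = list(reader)
    y = np.array([r[0] for r in rows])
    X = np.array([[float(v) for v in r[1:]] for r in rows])
    return X, y


def train_and_select(X, y, seed=1234):
    """Fit candidate models, score them on a held-out split, return (best, scores)."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=seed, stratify=y)
    candidates = {
        # Logistic regression benefits from standardized features.
        "lr": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
        # Tree ensembles are scale-invariant, so no scaler is needed.
        "rf": RandomForestClassifier(random_state=seed),
    }
    scores = {}
    for name, model in candidates.items():
        model.fit(X_train, y_train)
        scores[name] = accuracy_score(y_test, model.predict(X_test))
    best = max(scores, key=scores.get)
    return candidates[best], scores


def save_model(model, path="body_language.pkl"):
    """Persist the trained model so the live-detection step can load it."""
    with open(path, "wb") as f:
        pickle.dump(model, f)
```

Usage: `X, y = load_dataset("coords.csv")`, then `model, scores = train_and_select(X, y)` and `save_model(model)`. Printing `scores` shows how each candidate did on the held-out 30%.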

5. Make Detections with the Model: Deploy the trained model to make real-time predictions on new input, enabling the system to recognize and classify gestures or body language in dynamic environments.
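For step 5, the trained model can be applied frame by frame. In this sketch `extract_features` is a hypothetical parameter standing for whatever function produced the CSV rows during data capture (the live features must be built the same way), the 0.6 confidence threshold is an arbitrary assumption, and the MediaPipe call again assumes the legacy `mp.solutions.holistic` API.

```python
import pickle

import numpy as np


def load_model(path="body_language.pkl"):
    """Load a pickled scikit-learn classifier (hypothetical default file name)."""
    with open(path, "rb") as f:
        return pickle.load(f)


def predict_gesture(model, features, min_confidence=0.6):
    """Classify one frame's feature vector and return (label, probability).

    The label is None when the top class probability is below min_confidence."""
    probs = model.predict_proba(np.asarray(features, dtype=float).reshape(1, -1))[0]
    best = int(np.argmax(probs))
    label = model.classes_[best] if probs[best] >= min_confidence else None
    return label, float(probs[best])


def run_live(model, extract_features, camera_index=0):
    """Overlay the predicted class on the webcam feed; press 'q' to quit."""
    import cv2                    # imported lazily: only needed for the live loop
    import mediapipe as mp

    cap = cv2.VideoCapture(camera_index)
    with mp.solutions.holistic.Holistic(min_detection_confidence=0.5,
                                        min_tracking_confidence=0.5) as holistic:
        while cap.isOpened():
            ok, frame = cap.read()
            if not ok:
                break
            results = holistic.process(cv2.cvtColor(frame, cv2.COLOR_BGR2RGB))
            label, prob = predict_gesture(model, extract_features(results))
            text = f"{label} ({prob:.2f})" if label is not None else f"uncertain ({prob:.2f})"
            cv2.putText(frame, text, (10, 30), cv2.FONT_HERSHEY_SIMPLEX,
                        0.8, (0, 255, 0), 2)
            cv2.imshow("Body language", frame)
            if cv2.waitKey(10) & 0xFF == ord("q"):
                break
    cap.release()
    cv2.destroyAllWindows()
```

Reporting "uncertain" below a threshold, instead of always showing the top class, keeps the overlay from flickering between labels when the pose does not clearly match any trained gesture.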

✨ Let’s Collaborate!

I’d love to hear your thoughts! Whether it’s feedback, feature suggestions, or entirely new use cases, let’s explore these ideas together. Collaborate with me to push the boundaries of what AI-powered body language recognition can achieve. 😊

🔗 Explore the project here: https://github.com/iamramzan/P10-AI-B...