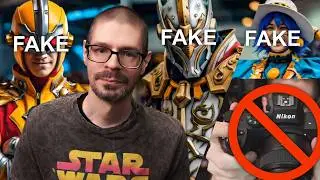

META training AI with our cosplay photography? 📷🤖

I enjoy people photography in the form of photographing cosplay and events. I'm more on the documentary side, so I like going to anime, sci-fi, and related conventions to take photos. That said, over the years I've dabbled in photoshoots and more detailed forms of cosplay photography, which can sometimes blend reality and art. Even so, a photograph in that case still has some connection to reality, despite heavy modification. With the advent of text-to-image generators, it is clear that many companies have been training on photography of every genre they can get their hands on. Meta is now officially using public posts on Instagram and Facebook to train its generative models, which includes generating cosplay photography and videography from a text prompt. Why are these models being trained on real people? What's the point? What's the value here? In this video, I talk about the various ways photographers and cosplayers are being taken advantage of by companies and groups that use our work to train their text-to-image AI models.

#ai #meta #stablediffusion

Text from my Instagram post:

Meta, give us the option to opt out of AI training on photographs of people, and remove any such photos and related metadata from your existing training data.

I'm going to frame this through the lens of cosplay and conventional photography.

Why should we be forced to hand over our real creations to train these systems, when the only alternatives are going private or leaving Instagram/Facebook? Is it that valuable to Meta? Why?

As a photographer and cosplayer, I see no valid current or future benefit in Stable Diffusion-style image generators.

What good is generative AI that assembles images to mimic a photograph, especially when it generates a photo of a human? Who benefits from this? People who want to pose as a photographer or a cosplayer? I'm struggling to see where the value is for the photographers and cosplayers who have helped make Instagram and Facebook galleries as relevant as they are.

In what situation would a photographer or cosplayer want to make a completely generated image this way? For some form of research? To test posing? To test lighting? I'm not talking about adding VFX or stretching out the borders of a photo, which might have some benefit to us.

Personally, I don't think any of these reasons justify using copyrighted photographs to build these advanced autocomplete systems that generate fake photographs and videos from a text prompt. Even if they reached the level of AGI, I don't need or see any real value in a computer assembling artificial photographs or video clips for public display. Even heavily modified photographs are meant to portray some level of reality. That's the point of a photograph.

What is generated is an assemblage of things that existed, but the output was never a real subject. It was never a real place. It was never a real event. Where is the value to a human in that, let alone a photographer or cosplayer? I photograph real people. I photograph real conventions. There is living history in it. There is humanity in it.

Meta is not even the worst offender here, because at least they are being clear about what is used for training and some portion of it is "open source"... but that doesn't make it right. Other diffusion systems, GPTs, and similar models likely haven't been as clear and are basically stealing photographs. Some of my video work is in EleutherAI's "The Pile" dataset, which I never agreed to, but that's another subject.

----------------------

References:

https://www.proofnews.org/youtube-ai-...

https://www.proofnews.org/apple-nvidi...

/ c_fhy5tm40i

https://noyb.eu/en/preliminary-noyb-w...

----------------------

Help support this channel by subscribing, giving a Super Thanks, or signing up for a membership!