LLM Mastery 03: Transformer Attention Is All You Need

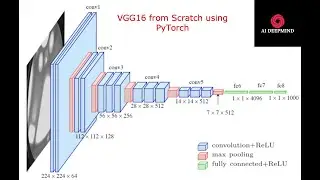

Welcome to the third episode of LLM Mastery! In this video, we take a deep dive into attention, the core mechanism that makes Transformers so powerful. Understanding how attention works is crucial for mastering Large Language Models (LLMs).

In this episode, you will learn:

Self-Attention Mechanism:

What is self-attention?

How does it work within a Transformer model?

Key steps: Query, Key, and Value vectors
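To make the Query, Key, and Value steps concrete, here is a minimal, dependency-free Python sketch of single-head scaled dot-product self-attention. The names `self_attention`, `matmul`, and `softmax` are our own, chosen for illustration, and the projection matrices `w_q`, `w_k`, `w_v` would be learned during training in a real model; here you pass them in by hand.

```python
import math

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def softmax(row):
    # Subtract the row max before exponentiating for numerical stability.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (seq_len x d_model) token embeddings.
    w_q, w_k, w_v: (d_model x d_k) projection matrices (learned in a real model).
    Returns (output, weights): output is (seq_len x d_k), weights is (seq_len x seq_len).
    """
    q = matmul(x, w_q)  # queries: what each token is looking for
    k = matmul(x, w_k)  # keys: what each token can be matched on
    v = matmul(x, w_v)  # values: the content that gets mixed together
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # every query dotted with every key
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]  # each row sums to 1
    return matmul(weights, v), weights
```

With three tokens `[[1, 0], [0, 1], [1, 1]]` and identity projections, every row of the returned weights sums to 1, and the first token attends equally to itself and to the third token because their query-key dot products tie.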

Multi-Head Attention:

Explanation of multi-head attention

How running several attention heads in parallel lets the model focus on different parts of the input, and different kinds of relationships, at the same time
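Multi-head attention runs several smaller attention computations side by side and concatenates their results. The sketch below assumes each head has its own hypothetical `w_q`, `w_k`, `w_v` matrices of shape (d_model x d_head) and that an output matrix `w_o` maps the concatenated heads back to the model width. It is a teaching sketch, not any library's API.

```python
import math

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention for one head."""
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)

def multi_head_attention(x, heads, w_o):
    """x: (seq_len x d_model) embeddings.
    heads: list of (w_q, w_k, w_v) tuples, one per head, each (d_model x d_head).
    w_o: (num_heads * d_head x d_model) output projection.
    """
    # Each head projects the same input differently, so it can attend to different things.
    head_outputs = [attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
                    for w_q, w_k, w_v in heads]
    # Concatenate the heads' outputs along the feature dimension, token by token.
    concat = [sum((h[i] for h in head_outputs), []) for i in range(len(x))]
    return matmul(concat, w_o)
```

Production implementations usually fuse all heads into one large matrix multiply and reshape the result, which is mathematically equivalent but much faster on GPUs.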

Positional Encoding:

The role of positional encoding in Transformers

How it helps the model understand the order of tokens
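Attention on its own ignores token order, so position information is added to the embeddings. The original Transformer used fixed sinusoidal encodings: for position pos and even feature index 2i, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)), and the next odd index uses cos of the same angle. A small sketch, with illustrative function names:

```python
import math

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a (seq_len x d_model) table of sinusoidal position encodings.

    Even feature indices use sine and odd ones use cosine; the wavelengths
    form a geometric progression controlled by the base 10000.
    """
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):  # i plays the role of 2i in the formula
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

def add_positions(embeddings):
    """Add position information to token embeddings element-wise."""
    pe = sinusoidal_positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(row, prow)] for row, prow in zip(embeddings, pe)]
```

Many later models, BERT among them, learn their position embeddings instead of using this fixed formula; either way, the position vectors are added to the token embeddings before the first attention layer.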

Attention in Practice:

Real-world applications of attention mechanisms

Examples from models like BERT and GPT-3
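One practical difference between these model families is the attention mask. BERT's encoder lets every token attend to every other token (bidirectional), while GPT-style decoders use causal self-attention, where each token may only look at itself and earlier positions. The sketch below, with a hypothetical `attention_weights` helper, shows how a causal mask is typically applied to the raw scores (Q·K^T / sqrt(d_k)) before the softmax.

```python
import math

def attention_weights(scores, causal=False):
    """Turn a (seq_len x seq_len) matrix of raw attention scores into weights.

    causal=False: every token may attend to every token (bidirectional, BERT-style).
    causal=True: token i may only attend to positions 0..i (GPT-style), enforced
    by setting scores for future positions to -inf before the softmax.
    """
    weights = []
    for i, row in enumerate(scores):
        masked = [s if (not causal or j <= i) else float("-inf")
                  for j, s in enumerate(row)]
        m = max(masked)  # finite, because position i itself is never masked
        exps = [math.exp(s - m) for s in masked]  # exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights
```

With all scores equal, the causal version gives the first token weights `[1.0, 0.0, 0.0]` and the second token `[0.5, 0.5, 0.0]`, while the bidirectional version spreads weight evenly across all three positions.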

Why should you watch this video?

Gain a comprehensive understanding of the attention mechanism

Learn how self-attention and multi-head attention work

See practical examples of attention in action

Enhance your knowledge of key concepts in LLMs

Stay Updated:

Subscribe, like, and hit the bell icon 🔔 to stay updated with new lessons. Let's continue this exciting journey and master the power of Large Language Models!

Follow Us:

Instagram: generativeaimind

Website: https://aiopsnexgen.com

#LLMMastery #AI #MachineLearning #DataScience #NaturalLanguageProcessing #DeepLearning #llm #largelanguagemodels #transformer #attention #ai #ml #dl #GPT #GPT3 #GPT4 #Googlebard