Building Multimodal AI RAG with LlamaIndex, NVIDIA NIM, and Milvus | LLM App Development

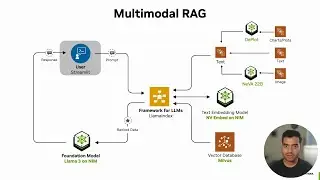

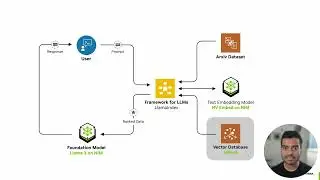

This video explains how to create a multimodal AI retrieval-augmented generation (RAG) application, including the following steps:

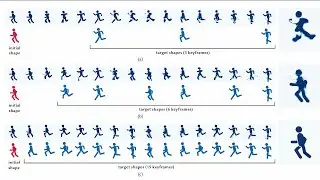

1. Document processing: Convert documents into text form using vision language models, specifically NeVA 22B for general image understanding and DePlot for charts and plots.

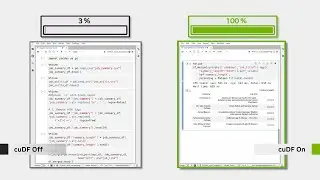

2. Vector database: Store your embeddings in GPU-accelerated Milvus and retrieve them efficiently at query time.

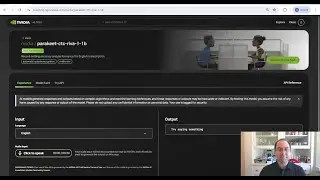

3. Inference with Llama 3: Use the Llama 3 model served through the NVIDIA NIM API to handle user queries and generate responses grounded in the retrieved content.

4. Orchestration with LlamaIndex: Use LlamaIndex to connect document processing, retrieval, and inference into a single Q&A pipeline.
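
To make the data flow between the four steps concrete, here is a minimal Python sketch. It is not the code from the video or the notebook: every name in it (`Chunk`, `embed`, `InMemoryStore`, `build_prompt`, `answer`) is hypothetical, the bag-of-words embedding stands in for a real embedding model, the in-memory list stands in for Milvus, and the `llm` callable stands in for a Llama 3 call through NVIDIA NIM. It assumes step 1 has already turned each image or chart into text.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Chunk:
    source: str  # hypothetical file name of the original image or page
    text: str    # text that step 1 (a vision language model) would produce


def embed(text: str) -> Counter:
    """Toy bag-of-words embedding; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class InMemoryStore:
    """Stand-in for step 2; a real setup would use a vector database."""

    def __init__(self) -> None:
        self.rows: List[Tuple[Counter, Chunk]] = []

    def add(self, chunk: Chunk) -> None:
        self.rows.append((embed(chunk.text), chunk))

    def search(self, query: str, k: int = 2) -> List[Chunk]:
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


def build_prompt(question: str, context: List[Chunk]) -> str:
    """Combine the retrieved chunks and the user question into one prompt."""
    lines = [f"[{c.source}] {c.text}" for c in context]
    return (
        "Answer using only this context:\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {question}"
    )


def answer(
    question: str, store: InMemoryStore, llm: Callable[[str], str], k: int = 2
) -> str:
    """Step 4: retrieve (step 2), then generate (step 3) with the given LLM."""
    return llm(build_prompt(question, store.search(question, k)))
```

Passing `llm=lambda prompt: prompt` returns the assembled prompt itself, which is a quick way to inspect what the model would receive before wiring in a real endpoint.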

📗 Learn more with this notebook: https://github.com/NVIDIA/GenerativeA...

Join the NVIDIA Developer Program: https://nvda.ws/3OhiXfl

Read and subscribe to the NVIDIA Technical Blog: https://nvda.ws/3XHae9F

#llm #llms #llama3 #llamaindex #nvidiaai #nvidianim #langchain #milvus

NVIDIA NIM, code review, LangChain, LlamaIndex, Llama 3, Milvus, NIM APIs, Mixtral, NeVA/DePlot