Azure Data Engineering End to End Project || Real Time Project

#AzureDataEngineeringProjectEndToEnd #EndToEndDataEngineering #AzureDataEngineering #AzureDataFactoryTutorial #AzureDataLakeStorageGen2 #AzureDatabricks #AzureSynapseAnalytics #DataEngineeringOnAzure #EndToEndAzureProject #DataTransformationWithAzure #AzureDataEngineeringWorkflow #PowerBIAzureIntegration #AzureDataEngineeringPipeline #DataIngestionAzure #AzureDataEngineeringTutorial #PySparkAzureDatabricks #DataLoadingWithAzureSynapse #IncrementalDataLoading #DataGovernanceAzure #AzureKeyVault #AzureActiveDirectory #AzureDataFactoryToPowerBI

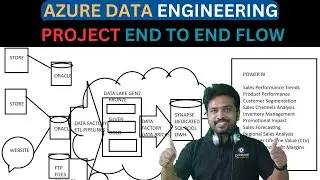

Welcome to our in-depth tutorial where we build a complete end-to-end Azure Data Engineering project! In this video, we’ll guide you through the entire data platform creation process, from data ingestion to reporting, using a suite of powerful Azure tools.

In this video, we cover:

Process 1: SQL Capabilities and Data Loading

Copying Data to Azure Data Lake Gen2:

Learn how to use Azure Data Factory to ingest tables from an on-premise SQL Server database and store the data in Azure Data Lake Storage Gen2.

We will implement incremental loading to ensure only newly modified or added rows are copied based on a timestamp (watermark value).

Creating External Tables in Synapse:

Discover how to set up an Azure Synapse Dedicated SQL Pool.

For each CSV file, we’ll create an external table using PolyBase and perform data joins.

We will demonstrate how to use stored procedures for inserting and updating data in target tables, including procedures like DIMCUSTOMER, DIMPRODUCT, and DIMCATEGORY.

Process 2: Data Flows in Azure Data Factory

Connecting to Raw Data:

Connect Azure Data Factory to your CSV files stored in the Data Lake Gen2 raw container.

Implement Slowly Changing Dimension (Type 1) mechanisms to manage data changes.

Data Transformation and Loading:

Perform insertions and updates of new or modified records into your target tables in Synapse Dedicated SQL Pool.

Process 3: Data Transformation with Azure Databricks

Using PySpark Notebooks:

We’ll create and configure mount points to access raw files stored in Data Lake Gen2.

Read and process multiple CSV files using PySpark, creating data frames for transformation.

Apply joins, transformations, and use merge statements to insert and update data efficiently.

FTP Server Data Handling:

Learn how to manage weekly CSV file uploads from an FTP server, including survey reports and feedback.

Additional Components:

Azure Key Vault: Securely manage and store secrets.

Azure Active Directory (AAD): Implement monitoring and governance strategies.

Interactive Reporting with Power BI:

Integrate Azure Synapse Analytics with Power BI to build dynamic and interactive dashboards that provide insightful reporting.

Key Highlights:

Efficient Incremental Data Loading and Data Transformation.

Data Management using Azure Synapse, Databricks, and Data Factory.

Secure and compliant Data Handling with Azure Key Vault and AAD.

Interactive Reporting with Power BI for actionable insights.

Additional Resources:

Sprint Spreadsheet Tasks

Interview Questions and Answers

Resume Preparation Tips

Real-Time Agile Methodology Examples

Real-Time Scenarios Included:

Handling data from SQL Server, Oracle, and FTP servers.

Implementing Slowly Changing Dimensions (Type 1).

Managing weekly CSV uploads for survey reports and feedback.

Whether you’re a seasoned Azure Data Engineer or just starting out, this video provides hands-on experience and practical knowledge to help you master end-to-end data engineering solutions.

Don’t forget to like, comment, and subscribe for more tutorials on Azure data engineering and related topics!

#AzureDataFactory #PowerBI #AzureDataEngineer #EndToEndProject #AzureDataEngineering #AzureDatabricks #AzureSynapseAnalytics #AzureDataLake #DataLake #AzureKeyVault #AzureActiveDirectory #DataEngineering #PySpark #SQLServer #FTPServer