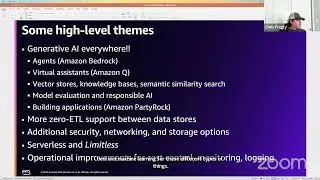

From RLHF with PPO/DPO to ORPO + How to build ORPO on Trainium/Neuron SDK

RSVP Webinar: https://www.eventbrite.com/e/webinar-...

Talk #0: Introduction

by Chris Fregly (Principal SA, Generative AI) and Antje Barth (Principal Developer Advocate, Generative AI)

Talk #1: Human Alignment with Reinforcement Learning from Human Feedback (RLHF) with both PPO and DPO

by Antje Barth (Principal Developer Advocate, Generative AI)

Proximal Policy Optimization (PPO): https://arxiv.org/pdf/1707.06347, 2017

Direct Preference Optimization (DPO): https://arxiv.org/pdf/2305.18290, 2023

Talk #2: From RLHF with PPO/DPO to ORPO + How to build ORPO on Trainium/Neuron SDK

by Hunter Carlisle (Senior SA, Annapurna ML)

ORPO is a recent fine-tuning technique that combines supervised fine-tuning (SFT) and preference alignment in a single training stage, without requiring a separate reference model.

Odds Ratio Preference Optimization (ORPO) paper: https://arxiv.org/pdf/2403.07691, 2024
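The single-stage idea can be illustrated with a minimal sketch of the ORPO objective from the paper linked above. The total loss is an SFT negative log-likelihood on the chosen response plus a weighted odds-ratio term that favors the chosen response over the rejected one. This plain-Python version assumes the per-token log-probabilities of each response were already produced by the model. The weight `lam` and all function names are illustrative assumptions. This is not the Trainium/Neuron SDK implementation covered in the talk.

```python
import math


def log_odds(avg_logp: float) -> float:
    """Return log(p / (1 - p)) for p = exp(avg_logp).

    Assumes avg_logp < 0, so that p < 1 and log(1 - p) is finite.
    """
    return avg_logp - math.log1p(-math.exp(avg_logp))


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def orpo_loss(chosen_token_logps, rejected_token_logps, lam=0.1):
    """Sketch of the ORPO loss for one (chosen, rejected) pair.

    lam is a hypothetical weight on the odds-ratio term.
    Returns (total_loss, sft_loss, odds_ratio_loss).
    """
    # Length-normalized log-likelihood of each response.
    avg_w = sum(chosen_token_logps) / len(chosen_token_logps)
    avg_l = sum(rejected_token_logps) / len(rejected_token_logps)

    # SFT term: negative log-likelihood of the chosen response.
    sft = -avg_w

    # Odds-ratio term: -log sigmoid(log(odds_chosen / odds_rejected)).
    log_odds_ratio = log_odds(avg_w) - log_odds(avg_l)
    odds_ratio_term = -log_sigmoid(log_odds_ratio)

    return sft + lam * odds_ratio_term, sft, odds_ratio_term
```

When the chosen and rejected responses are equally likely, the odds-ratio term equals log 2. It falls toward zero as the model assigns relatively higher odds to the chosen response. Both terms are minimized together, so no separate SFT stage or reference model is needed.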

Zoom link: https://us02web.zoom.us/j/82308186562

Related Links

Generative AI Free Course on DeepLearning.ai: https://bit.ly/gllm

O'Reilly Book: https://www.amazon.com/Generative-AWS...

Website: https://generativeaionaws.com

Meetup: https://meetup.generativeaionaws.com

GitHub Repo: https://github.com/generative-ai-on-aws/

YouTube: https://youtube.generativeaionaws.com