Stanford CS25: V3 I Low-level Embodied Intelligence w/ Foundation Models

October 10, 2023

Low-level Embodied Intelligence with Foundation Models

Fei Xia, Google DeepMind

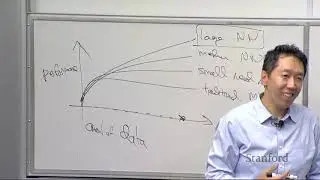

This talk introduces two approaches to low-level embodied intelligence that integrate large language models (LLMs) with robotics: "Language to Reward" and "Robotics Transformer-2" (RT-2). Language to Reward uses LLMs to generate reward code, which bridges high-level language instructions and low-level robotic actions. The method supports real-time user interaction, controls robotic arms across a variety of tasks, and outperforms baseline methods. RT-2 integrates vision-language models with robotic control by co-fine-tuning on robot trajectory data and large-scale web vision-language tasks. The resulting model generalizes strongly: robots can execute commands that did not appear in the training data and perform multi-stage semantic reasoning, showing improved contextual understanding of user commands. Together, these projects demonstrate that language models can extend beyond high-level reasoning, contributing not only to interpreting and generating instructions but also to generating low-level robotic actions.
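To make the "Language to Reward" idea concrete, here is a minimal toy sketch, not the system presented in the talk. It assumes a one-dimensional gripper, a hand-written `reward` function standing in for code an LLM might emit for an instruction such as "move the gripper to x = 0.5", and a simple random-shooting optimizer that turns that reward into low-level actions. All names (`reward`, `step`, `choose_action`, `run`), the dynamics, and the optimizer are hypothetical and chosen only for illustration.

```python
import random


def reward(state):
    """Stand-in for LLM-generated reward code (hypothetical).

    Instruction assumed for illustration: "move the gripper to x = 0.5".
    Higher reward means the gripper is closer to the target.
    """
    target_x = 0.5
    return -abs(state["gripper_x"] - target_x)


def step(state, action):
    """Toy 1-D dynamics: the action is a displacement clipped to [-0.1, 0.1]."""
    clipped = max(-0.1, min(0.1, action))
    return {"gripper_x": state["gripper_x"] + clipped}


def choose_action(state, reward_fn, rng, num_samples=64):
    """Random shooting: sample candidate actions and keep the one whose
    next state scores highest under the reward function."""
    candidates = [rng.uniform(-0.1, 0.1) for _ in range(num_samples)]
    return max(candidates, key=lambda a: reward_fn(step(state, a)))


def run(steps=30, seed=0):
    """Roll the toy controller forward from x = 0 and return the final state."""
    rng = random.Random(seed)
    state = {"gripper_x": 0.0}
    for _ in range(steps):
        state = step(state, choose_action(state, reward, rng))
    return state


if __name__ == "__main__":
    print(run())
```

The point of the sketch is the division of labor: the language model only has to write `reward`, and a separate optimizer searches for the actions that maximize it. Swapping in a different reward function changes the behavior without touching the controller.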

More about the course can be found here: https://web.stanford.edu/class/cs25/

View the entire CS25 Transformers United playlist: Stanford CS25 - Transformers United