NLP 3: Sequence to Sequence and Attention

In this workshop, we cover the following:

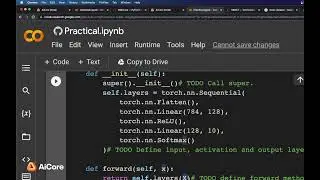

We introduce the encoder-decoder architecture and implement a Sequence to Sequence (Seq2Seq) model for machine translation.

We discuss the shortcomings of this architecture and introduce Attention as a way to overcome them.

We implement the Seq2Seq model with Attention.

Link to repo: https://github.com/AI-Core/NLP

Come visit http://theaicore.com for free AI training! We offer a wide variety of courses, including Deep Learning, Deep Reinforcement Learning, and Natural Language Processing.

Learn more: https://www.theaicore.com/